I wanted to share a debate that I had outside of CF.

Olle Häggström is a mathematician, a professor, and an AI safety proponent.

Häggström has written some books on science. His latest book is Here Be Dragons: Science, Technology and the Future of Humanity (2016).

On his blog I asked him about some criticism that Elliot has written regarding AI safety.

He didn’t seem interested in seriously looking into issues such as the possibility that, if Popper is right, he and many others are “… wasting their careers, misdirecting a lot of donations, incorrectly scaring people about existential dangers, etc.”, as Elliot writes in AGI Alignment and Karl Popper.

In our discussion Häggström claimed that he had a rebuttal of BoI / DD. He would not post it or give a summary at first:

Deutsch’s point is so weak (basically, what happens is that he is so seduced by his own abstractions that he simply forgets about more down-to-earth issues) that my rebuttal [is] not worth repeating.

I got his e-book and looked up what he wrote. I posted it in the discussion below (comment “2 January 2023, 19:07”).

Below is the entire discussion. I am “Anonym”.

A link to the discussion: https://haggstrom.blogspot.com/2022/11/scott-aaronson-on-ai-safety.html#comment-form

Anonym 26 December 2022, 12:31

Do you moderate comments? A few days ago I posted a comment on this post with some criticism of AI safety, but it is not available/visible.

Olle Häggström 27 December 2022, 17:11

I do moderate, but unfortunately I often do not check my inbox as often as would be ideal (in particular during holidays). Sorry about the delay.

Anonym 25 December 2022, 09:34

What is your take on some substantial criticism of “AI safety” regarding freedom, and on the claim that you seem to be fundamentally wrong about the power of AGI, such as this:

“… I do care about AGI welfare and think AGIs should have full rights, freedoms, citizenship, etc. […] I think it’s appalling that in the name of safety (maybe AGIs will want to turn us into paperclips for some reason, and will be able to kill us all due to being super-intelligent) many AGI researchers advocate working on “friendly AI” which is an attempt to design an AGI with built-in mind control so that, essentially, it’s our slave and is incapable of disagreeing with us. I also think these efforts are bound to fail on technical grounds – AGI researchers don’t understand BoI [“Beginning of Infinity” by David Deutsch] either, neither its implications for mind control (which is an attempt to take a universal system and limit it with no workarounds, which is basically a lost cause unless you’re willing to lose virtually all functionality) nor its implications for super intelligent AGIs (they’ll just be universal knowledge creators like us, and if you give one a CPU that is 1000x as powerful as a human brain then that’ll be very roughly as good as having 1000 people work on something which is the same compute power.). This, btw, speaks to the importance of some interdisciplinary knowledge. If they understood classical liberalism better, that would help them recognize slavery and refrain from advocating it.”

From: Curiosity – Discussing Animal Intelligence

Olle Häggström 27 December 2022, 17:22

Thinking of an AI as having rights is an interesting perspective that merits consideration. I am skeptical, however, of the present argument, partly because it seems to prove too much: if the argument were correct, it would also show that it is morally wrong to raise and educate our human offspring in the general direction of sharing our values, as if the upbringing and education succeeds, the offspring would be slaves to our values.

As regards Deutsch’s critique in his Beginning of Infinity: I do like the book, but in the particular case of AI safety, Deutsch is just confused (as explained in Footnote 238, p 104 of my book Here Be Dragons).

Anonym 27 December 2022, 19:02

I believe that it rather means that it is morally wrong to deny freedom of choice and thought to all forms of general intelligence (AGIs as well as human offspring).

Do you mind sharing your explanation of why Deutsch is wrong about AI safety, or briefly summarizing it here?

No problem about the delay.

Olle Häggström 28 December 2022, 11:01

So tell me, are you for or against raising children to behave well? It strikes me as bold to claim that aligned AGIs are denied freedom of choice and thought whereas well-raised human children are not. That seems to require a satisfactory theory of free will, something that is conspicuously lacking in the philosophical literature.

Anonym 28 December 2022, 14:42

I don’t follow your reasoning. Would aligned AGIs be able to think in any way possible to a human and do anything a human could do? I was under the impression they could not do this (hence the “aligned” part).

Would you mind sharing or briefly summarizing your criticism of Deutsch regarding AI safety?

Olle Häggström 1 January 2023, 11:54

I did that in my book Here Be Dragons (Oxford University Press, 2016). Deutsch’s point is so weak (basically, what happens is that he is so seduced by his own abstractions that he simply forgets about more down-to-earth issues) that my rebuttal [is] not worth repeating.

Olle Häggström 1 January 2023, 11:56

As regards “would aligned AGIs be able to think in any way possible to a human and do anything a human could do?”, the answer is yes, that’s what it means to be an AGI as opposed to just any old AI.

Anonym 2 January 2023, 19:07

Re Deutsch:

Ok. I looked up your rebuttal in “Here Be Dragons”. Is the following your full rebuttal?

“Similar to Bringsjord’s argument is one of Deutsch (2011). After having spent most of his book defending and elaborating on his claim that, with the scientific revolution, humans attained the potential to accomplish anything allowed by the laws of physics - a kind of universality in our competence - he addresses briefly the possibility of AIs outsmarting us, and dismisses it:

‘Most advocates of the Singularity believe that, soon after the AI breakthrough, superhuman minds will be constructed and that then, as Vinge put it, ‘the human era will be over.’ But my discussion of the universality of human minds rules out that possibility. Since humans are already universal explainers and constructors … there can be no such thing as a superhuman mind. …

Universality implies that, in every important sense, humans and AIs will never be other than equal. (p 456)’

This is not convincing, especially not the ‘in every important sense’ claim in the last sentence. Deutsch seems to have fallen in love with his own abstractions and theorizing to the extent of losing touch with the real world. Assuming that his theory about the universal reach of human intelligence is correct, we still have plenty of cognitive and other shortcomings here and now, so there is nothing in his theory that rules out an AI breakthrough resulting in a Terminator-like scenario with robots that are capable of exterminating humanity and taking over the world. This asymmetry in military power would then most certainly be one ‘important sense’ in which humans and AIs are not always equals.”

(Here is the quoted paragraph in its entirety from Deutsch’s “The Beginning of Infinity”, for readers who aren’t familiar with it and because I think that the quote in “Here Be Dragons” cuts out important parts:

“Most advocates of the Singularity believe that, soon after the AI breakthrough, superhuman minds will be constructed and that then, as Vinge put it, ‘the human era will be over.’ But my discussion of the universality of human minds rules out that possibility. Since humans are already universal explainers and constructors, they can already transcend their parochial origins, so there can be no such thing as a superhuman mind as such. There can only be further automation, allowing the existing kind of human thinking to be carried out faster, and with more working memory, and delegating ‘perspiration’ phases to (non-AI) automata. A great deal of this has already happened with computers and other machinery, as well as with the general increase in wealth which has multiplied the number of humans who are able to spend their time thinking. This can indeed be expected to continue. For instance, there will be ever-more-efficient human–computer interfaces, no doubt culminating in add-ons for the brain. But tasks like internet searching will never be carried out by super-fast AIs scanning billions of documents creatively for meaning, because they will not want to perform such tasks any more than humans do. Nor will artificial scientists, mathematicians and philosophers ever wield concepts or arguments that humans are inherently incapable of understanding. Universality implies that, in every important sense, humans and AIs will never be other than equal.”)

Anonym 2 January 2023, 19:08

“As regards ‘would aligned AGIs be able to think in any way possible to a human and do anything a human could do?’, the answer is yes, that’s what it means to be an AGI as opposed to just any old AI.”

Ok, thanks. It is my understanding that aligned AGIs would not be able to have their own goals (but would rather have goals chosen by its creator, owner - master of some sort). Am I correct to assume that?

Olle Häggström 4 January 2023, 09:42

Anonymous 19:07. Assuming for the moment the correctness of Deutsch’s argument that humanity cannot possibly be wiped out by an army of robots because we are universal explainers, then the same argument shows that I cannot possibly lose in chess against Magnus Carlsen because I am a universal explainer. I hope this helps you see how abysmally silly Deutsch’s argument is.

Anonymous 19:08. I don’t think the concept “their own goals” makes much sense. You have a goal, and that is then automatically your own goal; the words “your own” add nothing here. If you insist that an aligned AI does not have its own goal “but would rather have goals chosen by its creator, owner - master of some sort”, then the same applies to you and me: we don’t have our own goals but rather have goals chosen by our genes, our upbringings, our educations, and the rest of the enormous collection of stimuli imposed on us by our environment. Perhaps you feel (following a long line of philosophers of free will) despair about this situation, but as for myself, I have adopted these goals as my own and feel perfectly happy with that, and I don’t think of these goals as an infringement of my freedom, because I still do what I want. Most likely, AGIs will be similarly content about their goals (and if instead they are prone to unproductive worries about free will, they would presumably be equally worried regardless of whether their values are purposely aligned with ours or have been imposed on them by genes/environment/etc in some other way).

Anonym 4 January 2023, 21:38

I don’t think that “humanity cannot possibly be wiped out by an army of robots because we are universal explainers” is Deutsch’s argument. Is this what you believe?

If you do believe it, I will try to engage with your comment. But if not, if this is just an argument you made up and attributed to Deutsch, then I see no need to engage with it.

–

You seem to attribute our goals to anything but our minds and choices: “we don’t have our own goals but rather have goals chosen by our genes, our upbringings, our educations, and the rest of the enormous collection of stimuli imposed on us by our environment.”

That sounds like determinism to me. I think that determinism is mistaken. I think that we do have free will, that we do make choices, that moral ideas do matter, and that moral philosophy has value.

Olle Häggström 5 January 2023, 09:24

Determinism could of course be mistaken, and I could of course have added roulette wheels to the list of determinants of our goals. I have, however, always found the idea of roulette wheels (or other sources of randomness, outside or inside our skulls) as the saviors of free will to be one of the murkiest ideas in the entire free will debate. There is no way that finding out that my choices are determined by the spinning of a roulette wheel would make me feel more like a free and autonomous agent.

Anonym 5 January 2023, 10:14

I don’t understand what you mean by “roulette wheels (or other sources of randomness, outside or inside our skulls)”. Could you explain?

I think that Elliot Temple is correct when he writes “free will is a part of moral philosophy.”

Temple continues: “If you’re going to reject [free will], you should indicate what you think the consequences for moral philosophy are. Is moral philosophy pretty much all incoherent since it talks about how to make choices well and you say people don’t actually make choices? If moral philosophy is all wrong, that leads to some tricky questions like why it has seemed to work – why societies with better moral philosophy (by the normal standards before taking into account the refutation of free will) have actually been more successful.”

How do you deal with the criticism of your position (no free will) that Temple writes about?

Olle Häggström 5 January 2023, 10:49

When you wrote “I think that determinism is mistaken. I think that we do have free will” I took that to mean that you saw nondeterministic (i.e., random) processes as the savior of free will. Perhaps I misunderstood.

Regarding Elliot Temple, well, I do agree with him that people make choices, and that moral philosophy is interesting and important. I just don’t ground that to the incoherent notion of free will.

Now, if you forgive me, I don’t wish to spend any more time on free will, as I find the topic unproductive. I used to spend a fair amount of ink on it in the early days of this blog (see here), so if you’re super interested in what I’ve said on the topic, go check that out.

Anonym 5 January 2023, 18:04

“… I don’t wish to spend any more time on free will, as I find the topic unproductive.”

I agree.

My primary goal was to hear what arguments AI safety proponents have against the criticism of AGI and AI safety that comes from e.g. Temple, Deutsch, and Popper, and also to find out whether AI safety proponents have engaged with this kind of criticism.

Some of the criticism that, as I understand it, AI safety proponents would need to address includes:

- universality (e.g. Temple: “… human minds are universal. An AGI will, at best, also be universal. It won’t be super powerful. It won’t dramatically outthink us.”)

- epistemology (e.g. Temple: “In other words, AGI research and AGI alignment research are both broadly premised on Popper being wrong. Most of the work being done is an implicit bet that Popper is wrong. If Popper is right, many people are wasting their careers, misdirecting a lot of donations, incorrectly scaring people about existential dangers, etc.”)

- liberty (e.g. how do AI safety proponents engage with classical liberal ideas such as freedom, ruling over others, etc.)

(The Elliot Temple quotes are from his post “AGI Alignment and Karl Popper”: Curiosity – AGI Alignment and Karl Popper)

Would you be interested in addressing any of this criticism more? (I fail to see how the criticism of Deutsch in “Here Be Dragons” refutes Deutsch btw.)

I’ll end this comment in the same spirit in which Temple ended his post (the quoted and linked post in this message):

If you are not interested in addressing any of this, is there any AI safety proponent who is? If there is not, don’t you think that that is a problem?

Olle Häggström 6 January 2023, 08:41

Look: while the AI alignment research community is growing, it is still catastrophically short-staffed in relation to the dauntingly difficult but momentously important task that it faces. This means that although members of this community work heroically to understand the problem from many different angles, it still needs (unfortunately) to be selective in what approaches to pursue. There simply isn’t time to address in detail the writings every poorly informed critic puts forth. Elliot Temple is a case in point here, and my advice to him is to put in the work needed to actually understand AI Alignment, and if his criticisms seem to survive this deeper understanding, then he should put it forth in a more articulate manner. I am sorry to say it so bluntly, but until he does as I suggest, we have better things to do.

And for similar reasons, this is where our conversation ends. Thank you for engaging, and I can only encourage you to scale up your engagement via a deeper familiarization with the field (such as by reading Stuart Russell’s book and then following the discussions at the AI Alignment Forum). I tried to explain to you the utter misguidedness of Deutsch’s universality criticism, but apparently my patience and my pedagogical skills fell short; apologies for that. As a minor compensation, and since you mention Popper, let me offer you as a parting gift a link to my old blog post on vulgopopperian approaches to AI futurology.

Anonym 6 January 2023, 10:09

I understand the importance of being selective in choosing what to engage with. This is not an argument against engaging with Popper, though, because if Popper is correct, then finding that out is the most important issue for the field, since it would mean that the approach of the whole field is mistaken.

Using an ad hominem, calling Elliot Temple a “poorly informed critic”, doesn’t address his arguments. I’m sure you know this, yet for some reason you chose to do so anyway.

It’s a strange thing to claim that you have “better things to do” if what Temple is saying here is true:

“AGI research and AGI alignment research are both broadly premised on Popper being wrong. Most of the work being done is an implicit bet that Popper is wrong. If Popper is right, many people are wasting their careers, misdirecting a lot of donations, incorrectly scaring people about existential dangers, etc.”

What better things could there be? I’m wondering, because no one is explaining if and why he is wrong on this point.

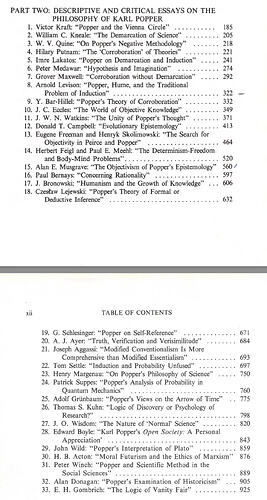

I have already read your “Vulgopopperianism” blog post. Reading it and the parts on Popper in your book, “Here Be Dragons”, doesn’t leave me with the impression that you understand Popper and critical rationalism well. From what I could see, you never address Popper’s criticism of induction, for example, and neither does anyone else, at least not with arguments that Popper or other Popperians have not already answered. At least not to the best of my knowledge.

As you do not wish to discuss this any further to help me understand whether I am mistaken, I am left with the impression that:

- You don’t have answers to Popper, nor do you seem to care whether you are wrong about Popper and might be wasting money and your time, incorrectly scaring people, etc.

- The AI alignment movement seems to be anti-liberal (classical liberal ideas don’t seem important to them)

- The AI alignment movement does not seem to think that it is important to engage with criticism claiming that they might be totally wrong (they simply have “better things to do” - no explanations needed)

Olle Häggström 6 January 2023, 10:39

Feel free to anonymously judge me personally as being closed-minded, unknowledgeable, anti-liberal and generally stupid, but please note that I am neither identical to nor particularly representative of the AI alignment community as a whole. Your generalization of your judgement about me to that entire AI community is therefore unwarranted and mean-spirited.

Anonym 6 January 2023, 11:09

I did ask whether anyone other than you is willing to answer these questions. I quote from my message of 5 January 2023, 18:04:

“If you are not interested in addressing any of this, is there any AI safety proponent who is?”

I am also addressing what you said. I quote you from 6 January 2023, 08:41:

“I am sorry to say it so bluntly, but until he does as I suggest, we have better things to do.”

“We” means not just you. You chose to phrase it like this.

Furthermore, I have seen Elliot Temple try to engage with the AI community, e.g. on LessWrong, to figure these things out, and no one there was willing to engage with the core issues and try to figure them out either. I have also seen Temple try to engage with MIRI people.

Curiosity – Open Letter to Machine Intelligence Research Institute

https://curi.us/archives/list_category/126

I didn’t mention these things before, so your judgement of my judgement of the community could have been seen as warranted. I have now added some additional context.

If you know of anyone at all in the AI community who is willing to engage with these issues, do let me know. If, on the other hand, you do not know of anyone, then I believe my judgement to be fair.