I wrote:

I don’t think it can really be changed.

I think all types of abstract, conceptual, logical or mathematical thinking are learnable skills which are a significant part of what learning about rationality involves. As usual, I have arguments and I’m open to debate.

I have put substantial effort into teaching some of this stuff, e.g. by building from sentence grammar trees (focused on understanding a sentence) to paragraph trees (focused on understanding relationships between sentences) to higher level trees (e.g. about relationships between paragraphs). There are many things people could do to practice and get better at things. I’ve found few people want to try very persistently though. Lots of people keep looking around for things where they can have some sort of immediate success and avoid or give up on stuff that would take weeks (let alone months or years). Also a lot of people focus their learning mostly on school subjects or stuff related to their career.

I’m concerned that most people on EA are too intolerant or uncurious to talk to people with large differences in perspective.

Oh, yes, to a certain degree, like most people. But less than most people in my opinion.

I don’t disagree with that. But unfortunately I don’t think the level of tolerance, while above average, is enough for many of them to deal with me. My biggest concern, though, is that moderators will censor or ban me if I’m too unpopular for too long. That is how most forums work and EA doesn’t have adequate written policies to clearly differentiate itself or prevent that. I’ve seen nothing acknowledging that problem, discussing the upsides and temptations, and stating how they avoid it while avoiding the downsides of leaving people uncensored and unbanned. Also, EA does enforce various norms, many of which are quite non-specific (e.g. civility), and it’s not that hard to make an excuse about someone violating norms and then get rid of them. People commonly do that kind of thing without quoting a single example, and sometimes without even (inaccurately) paraphrasing any examples. And if someone writes a lot of things, you can often cherry pick a quote or two which is potentially offensive, especially out of the long discussion context it comes from.

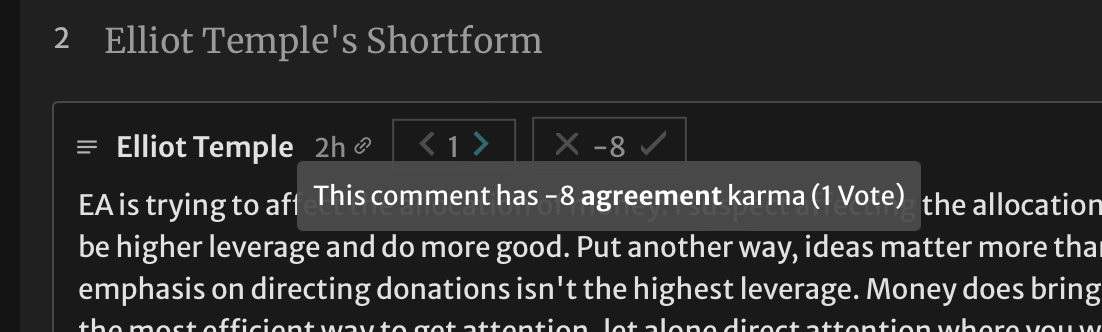

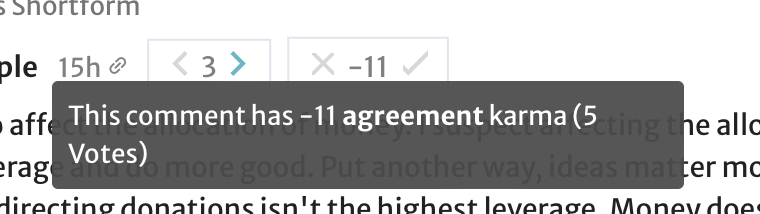

Things like downvotes can be early warning signs of harsher measures. If someone does the whole Feynman thing and doesn’t care what other people think, and ignores downvotes, people tend to escalate. They were downvoting for a reason. If they can’t socially pressure you into changing with downvotes, they’ll commonly try other ways to get what they want. On a related note, I was disappointed when I found out that both Reddit and Hacker News don’t just let users vote content to the front page and leave it at that. Moderators control what’s on the front page significantly. When the voting plus algorithm gets a result they like, they leave it alone. When they don’t like the result, they manually make changes. I originally naively thought that people setting up a voting system would treat it like an explicit written policy guarantee – whatever is voted up the most should be on top (according to a fair algorithm that also decays upvotes based on age). But actually there are lots of unwritten, hidden rules and people aren’t just happy to accept the outcome of voting. (Note: Even negative karma posts sometimes get too much visibility on small forums or subreddits, thus motivating people to suppress them further because they aren’t satisfied with the algorithm’s results. Some people aren’t like “Anyone can see it’s got -10 karma and then make a decision about whether to trust the voters or investigate the outlier or what.” Some people are intolerant and want to suppress stuff they dislike.)

I don’t know somewhere else better to go though. And my own forum is too small.

That’s why I recommend examples of policies.

I will post more examples. I have multiple essays in progress.

You might be interested in that link.

Broadly, if EA is a place where you can come to compete with others at marketing your ideas to get social status and popularity, that is a huge problem. That is not a rationality forum. That’s a status hierarchy like all the others. A rationality forum must have mechanisms for unpopular ideas to get attention, to disincentivize social climbing behaviors, to help enable people to stand up to, resist or call out social pressures, etc. It should have design features to help attention get allocated in other ways besides whatever is conventionally appealing (or attention grabbing) to people that marketing focuses on.

One of the big things I think EA is missing – and I have the same complaint about basically everyone else (again, it’s not a way EA is worse) – is anyone who takes responsibility for answering criticism. No one in particular feels responsible for seeing that anyone answers criticism or questions. Stuff can just be ignored and if that turns out to be a mistake, it’s no one’s fault, no one is to blame, it was no one’s job to have avoided that outcome. And there’s no attempt to organize debate. I think a lot of debate happens anyway but it’s systematically biased to be about sub-issues instead of questioning people’s premises like I do. Most people learn stuff (or specialize in it) based on some premises they don’t study that much, and then they only want to have debates and address criticism that treats those premises as givens like they’re used to, but if you challenge their fundamental premises then they don’t know what to do, don’t like it, and won’t engage. And the lack of anyone having responsibility for anything, combined with people not wanting to deal with fundamental challenges, basically results in EA being fundamentally wrong about some issues and staying that way. People tend not to even try to learn a subject in terms of all levels of abstraction, from the initial premises to the final details, so then they won’t debate the other parts because they can’t, which is a big problem when it’s widespread. E.g. all claims about animal welfare, AI alignment, or clean water interventions depend in some way on epistemology. Most people who know something about factory farms do not know enough to defend their epistemological premises in debate.
Even if they do know some epistemology, it’s probably just Bayesian epistemology and they aren’t in a position to debate with a Popperian about fundamental issues like whether induction works at all, and they haven’t read Popper, and they don’t want to read Popper, and they don’t know of any literature which refutes Popper that they can endorse, and they don’t know of any expert on their side who has read Popper and can debate the matter competently … but somehow that’s OK with them instead of seeming awful. Certainly almost everyone who cares about factory farms would just be confused instead of thinking “omg, thanks for bringing this up, I will start reading Popper now”. And of course Popperian disagreements are just one example of many. And even if Popper is totally right about epistemology and Bayes is wrong, what difference does that make to factory farming? That is a complex matter and it’d take a lot of work to make all the updates, and a lot of the relevance is indirect and requires complicated chains of reasoning to get from the more fundamental subject to the less fundamental one. But there would very likely be many updates.

But I think what they would really like is a straightforward way of doing that, with proven results.

It’s too much to ask for. We live in an inadequate society as Yudkowsky would say. Rationality stuff is really, really broken. People should be happy and eager to embark on speculative rationality projects that involve lots of hard work for no guaranteed results – because the status quo is so bad and intolerable that they really want to try for better. Anyone who won’t do that has some kind of major disagreement with not only me but also, IMO, Yudkowsky.

Basically, to see that your approach works. Right now they have no way of knowing whether what you propose provides good results. People tend to ignore problems for which there is no good solution, so, in addition to saying that something is conceptually important, you have to provide the solution.

One way to see that my approach works is that I will win every single debate, including while making unexpected, counter-intuitive claims and challenging widely held EA beliefs. But people mostly won’t debate so it’s hard to demonstrate that. Also, even if people began debates, they would mostly want to talk about concrete subjects like nutrition or poverty, not about debate methodology. But debating debate methodology basically has to come first, followed by debating epistemology, because the other stuff is downstream of that. If people are reasonable enough and acknowledge their weaknesses and inabilities you can skip a lot of stuff and still have a useful discussion, but what will end up happening with most people is they make around one basic error per paragraph or more, and when you try to point one out they make two more when responding, so it becomes an exponential mess which they will never untangle. They have to improve their skills and fundamentals, or be very aware of their ignorance (like some young children sorta are), before they can debate hard stuff effectively. But that’s work. By basic errors I mean things like writing something ambiguous, misreading something, forgetting something relevant that they read or wrote recently, using a biased framing for an issue, logical errors, mathematical errors, factual errors, grammar errors, not answering questions, or writing something different than what they meant. In a world where almost everyone makes those types of errors around once a paragraph or more, in addition to being biased, and also not wanting to debate … it’s hard. Also people frequently try to write complex stuff, on purpose, despite lacking the skill to handle that complexity, so they just make messes.

The other way to see it works, besides debating me, is to consider it conceptually. It has reasoning. As best I know, there are criticisms of alternatives and no known refutations of my claims. If anyone knows otherwise they are welcome to speak up. But that’d require things like reviewing the field, understanding what I’m saying, etc. Which gets into issues of how people allocate attention and what happens when no one even tries to refute something because a whole group of people all won’t allocate attention to it and there’s no leader who takes responsibility for either engaging with it or delegating.

Well, that was more than enough for now so I’ll just stop here. I have a lot of things I’d be interested in talking about if anyone was willing, and I appreciate that you’re talking with me. I could keep writing more but I already wrote 4600 words before this 1800 so I really need to stop now.