EDIT/UPDATE: Find all my EA-related stuff at Curiosity – Effective Altruism Related Articles

I asked Effective Altruism (EA) whether they have a rational debate methodology.

I have some agreements and disagreements with EA, and I’m considering discussing them. I have questions about how that discussion could work.

Is there a way here to get an organized, rational debate that follows a written methodology? I would want a methodology with design features aimed at rationally reaching a conclusion.

I’m not looking for casual, informal chatting that doesn’t try to follow any particular rules or methods. In my experience, such discussions are bad at being rational and at reaching conclusions. I prefer discussion aimed at achieving specified goals (like conclusions about issues) using methods chosen on purpose to be appropriate to those goals. I’m willing to take on responsibilities and commitments in discussions, and I prefer to talk with people who are also willing to do that.

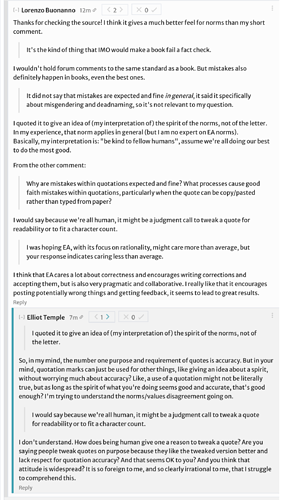

I understand that “You don’t have to respond to every comment” is a standard attitude here. I think it’s good for a type of discussion to exist in which people may ignore whatever ideas they want, with no transparency. That should be an available option, and it’s an appropriate default. But I don’t think it should be the only type of discussion, so I’m asking whether alternatives are available.

Some issues I would expect a debate methodology to address are:

- Starting and stopping conditions

- Topic branching

- Meta discussion

- Prioritization

- Bias

- Dishonesty

- Social status related behaviors

- Transparency

- A method of staying organized, including tracking discussion progress and open issues

- Replying to long messages without reading them in full

- The use of citations and quotes rather than writing new arguments

- When it’s appropriate to expect a participant to read literature before continuing (or study or practice), or more broadly the issue of asking people to do work

- How to handle a participant who is missing some prerequisite knowledge, skill or relevant specialization

- Can debates involve more than two people, and if so how is that handled?

- What should you do if you think your debate partner is making a bunch of errors which derail discussion?

- Should messages ever be edited after being read or replied to?

- What should you do if you think a participant has violated the methodology?

- What should you do if you believe a participant insults you, misquotes you, or repeatedly says or implies subtly or ambiguously negative things about you?