Summary of Resolving Conflicting Ideas:

An important problem in philosophy is how to resolve conflicting ideas. Conflicting ideas come up in a lot of places: in making decisions, in taking action, between minds, and within minds. Critical Fallibilism (CF) says to resolve these conflicting ideas by creating new win/win solutions that address each side of the conflict, not by picking winners and losers. We can do this by mentally modelling the ideas as people in a discussion, with us being the discussion’s neutral arbiter.

Notes:

Elliot thinks the problem of how to rationally resolve conflicts between ideas is one of the most important issues in philosophy.

problem: what should we do about the fact that we have lots of ideas that conflict/disagree with each other? implied answer: we should resolve the conflicts, and figure out which ideas to accept and reject.

why? presumably because we rely on our ideas and knowledge for everything we do, so we want knowledge of good quality that won’t lead us into error. Errors and conflicts in our knowledge will thwart our intentions and make our actions ineffective or counter-productive. If we’re rational we want to pursue the truth. True ideas don’t conflict with each other, so resolving conflicts is truth-seeking (if we have conflicting ideas, we know that at least one of them is false).

problem: how do we evaluate the ideas and figure out what to accept?

Rather than trying to pick winners and losers among ideas, Critical Fallibilism says we should find win/win solutions which address all the good points raised by all the conflicting ideas.

So according to CF, simply evaluating the existing, conflicting ideas and choosing one to accept is the wrong approach. It advocates creating new ideas which resolve the conflict, not deciding between the existing ones. It sees the conflicting ideas, as they are, as inadequate in some way?

(Is this because a problem that each of the conflicting ideas has is that it is inadequate to address the conflict between them? (because otherwise there would be no conflict?) Like, maybe the fact that they conflict is a criticism of each of the ideas? I’m not sure about that idea. I don’t yet understand why just picking between the two ideas is wrong.)

So we can model the conflicting ideas, whether within one mind or between multiple minds, as people in a discussion for whom we are the neutral arbiter. We try to find a solution that each party to the discussion is happy with: a new idea that both prefer to their original idea. We don’t side with one party over the other.

Elliot then links to a bunch of other articles that explain more about the ideas in the article.

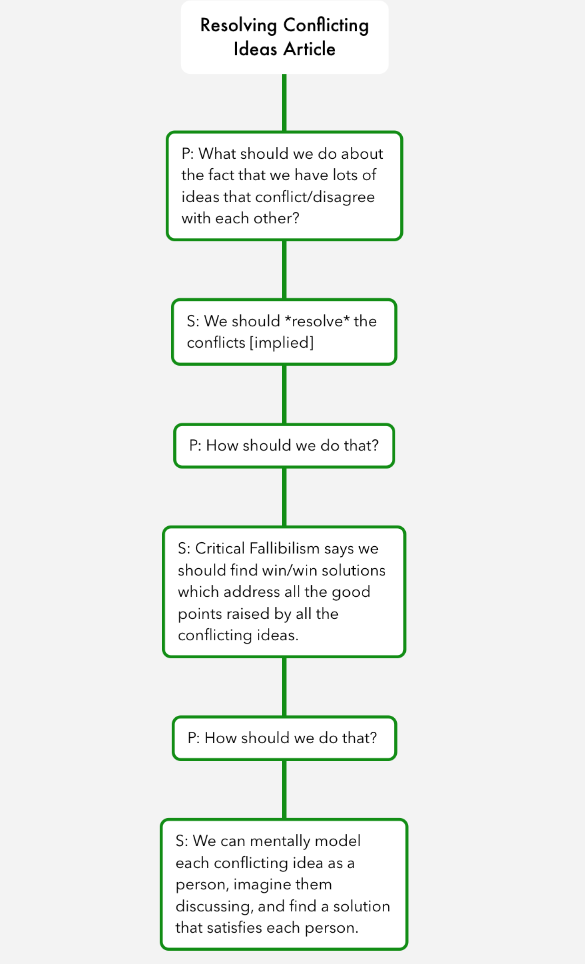

Here is a tree that just focuses on problems and solutions brought up in the part of the article before the link section: